This blog explains what GPU virtualization is, how OpenStack Nova approaches it, and how you can enable vGPU support in Mirantis OpenStack for Kubernetes. The TOKEN is used to bootstrap your cluster and connect to it from your node. Kubernetes version: For node pools using the Container-Optimized OS node image, GPU nodes are available in GKE version 1.9 or higher. For node pools using the Ubuntu node image, GPU nodes are available in GKE version 1.11.3 or higher.
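The GKE version requirements above can be expressed as a small check. This is only a sketch: the image keys `cos` and `ubuntu` and the function names are hypothetical labels chosen for illustration, not GKE identifiers.

```python
# Minimum GKE versions for GPU node pools, as stated in the text above.
# The keys "cos" and "ubuntu" are hypothetical labels, not GKE identifiers.
MIN_GPU_VERSION = {
    "cos": (1, 9),         # Container-Optimized OS node image
    "ubuntu": (1, 11, 3),  # Ubuntu node image
}


def parse_version(version: str) -> tuple:
    """Turn '1.11.3' or '1.11.3-gke.18' into a tuple of ints such as (1, 11, 3)."""
    core = version.lstrip("v").split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


def gpu_nodes_available(image: str, version: str) -> bool:
    """Return True if the version meets the stated minimum for the node image."""
    return parse_version(version) >= MIN_GPU_VERSION[image]
```

For example, `gpu_nodes_available("ubuntu", "1.11.2")` is false because 1.11.2 is below the 1.11.3 minimum stated for the Ubuntu image.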

# Using GPU with Docker on Kubernetes: how to

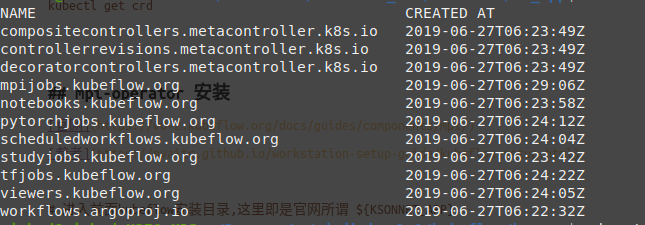

Learn how to use kubeadm to quickly bootstrap a Kubernetes master/node cluster and use a Kubernetes GPU device plugin to install GPU drivers. In this category of components we have the API Server: it exposes the Kubernetes API and is the entry point for the Kubernetes control plane. When Docker Desktop is ready, you'll see two green lights at the bottom of the settings screen saying Docker running and Kubernetes running.

# Using GPU with Docker on Kubernetes: download

Use this tutorial as a reference for setting up GPU-enabled machines in an IBM Cloud environment. Click on Kubernetes and check the Enable Kubernetes checkbox. That's it: Docker Desktop will download all the Kubernetes images in the background and get everything started up.

In a typical HPC environment, researchers and other data scientists would need to set up these vendor devices themselves and troubleshoot when they failed. With the Kubernetes Device Plugin API, Kubernetes operators can deploy plugins that automatically enable specialized hardware support. The newly discovered devices are then offered up as normal Kubernetes consumable resources like memory or CPUs.
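To make the "consumable resource" idea concrete, here is a minimal sketch that builds a Pod manifest requesting a GPU the same way a pod would request CPU or memory. It assumes the NVIDIA device plugin, which advertises GPUs under the resource name `nvidia.com/gpu`; the text above does not name a vendor, so that choice is an assumption. The pod name and the image `example.com/cuda-job:latest` are hypothetical placeholders.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Pod manifest that asks the scheduler for `gpus` GPU devices.

    Assumes the NVIDIA device plugin, which exposes GPUs as the extended
    resource "nvidia.com/gpu"; other vendors use other resource names.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are requested under "limits", like any extended resource.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image, used only for illustration.
    manifest = gpu_pod_manifest("gpu-test", "example.com/cuda-job:latest")
    print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed manifest could be piped into `kubectl apply -f -` on a cluster where a GPU device plugin is running.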

The Kubernetes Resource Management Working Group was incubated during the 2016 Kubernetes Developer Summit in Seattle, WA, with the goal of running complex high-performance computing (HPC) workloads on top of Kubernetes. The group's goal was to support hardware-accelerated devices, including graphics processing units (GPUs) and specialized network interface cards.

Distributed deep machine learning requires careful setup and maintenance of a variety of tools.

A guide to GPU sharing on top of Kubernetes. Currently, standard Kubernetes does not support sharing GPUs across pods. However, by extending the Kubernetes scheduler module, we confirmed that it is possible to virtualise GPU memory and thereby unlock flexibility in sharing and utilising the available GPU power.

    kind-control-plane Ready master 4m7s v1.18.2

We can control the type of cluster that gets created with a custom kind config file. Pretty obviously, this is a config for 1 Kubernetes manager node and 3 Kubernetes worker nodes. We can create this cluster with:

    kind create cluster --config my-kind-config.yaml

After like thirty seconds you'll be able to verify this with:

    $ kubectl get nodes -A
    kind-control-plane NotReady master 60s v1.18.
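The config file referred to above is not reproduced in the text. As a sketch under assumptions, a config for 1 control-plane node and 3 worker nodes could be generated like this. The `kind.x-k8s.io/v1alpha4` API version is an assumption about the kind release in use, and `kind_config` is a hypothetical helper name.

```python
def kind_config(workers: int = 3) -> str:
    """Return a kind cluster config with one control-plane node and `workers` workers.

    The apiVersion below is an assumption; check it against your kind release.
    """
    lines = [
        "kind: Cluster",
        "apiVersion: kind.x-k8s.io/v1alpha4",
        "nodes:",
        "- role: control-plane",
    ]
    # One list entry per worker node.
    lines += ["- role: worker"] * workers
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Redirect the output to my-kind-config.yaml to use it with kind.
    print(kind_config(3), end="")
```

Saving the output as `my-kind-config.yaml` gives a file that can be passed to `kind create cluster --config my-kind-config.yaml`.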

We can see that with a couple of commands:

    $ docker container ls -a
    CONTAINER ID   IMAGE                  COMMAND                  CREATED         STATUS         PORTS                       NAMES
    78e981f32e68   kindest/node:v1.18.2   "/usr/local/bin/entr…"   4 minutes ago   Up 4 minutes   127.0.0.1:39743->6443/tcp   kind-control-plane

Kind is a tool that spins up a Kubernetes cluster of arbitrary size on your local machine. It is, in my experience, more lightweight than minikube, and also allows for a multi-node setup.
